Q&A Chatbot with LLMs via Retrieval Augmented Generation for Hallucination Mitigation

Motivation

Q&A systems built on large language models (LLMs) have shown remarkable performance in generating human-like responses. However, LLMs often hallucinate: they generate information that sounds plausible but is incorrect.

Introduction

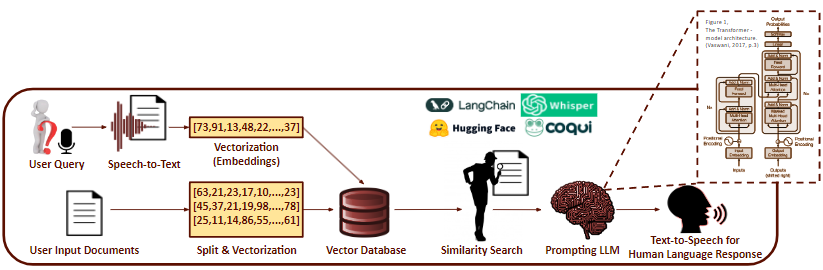

To address this issue, we are developing a Q&A chatbot system that uses retrieval-augmented generation (RAG). The system vectorizes the documents a user provides (we currently use PDF format) and stores them in a database that grounds the chatbot's answers. When a user asks a question, the system runs a semantic similarity search over the stored vectors to retrieve the most relevant passages. The LLM then turns the retrieved passages into a human-like response.
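The retrieve-then-generate flow can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: `embed` is a toy bag-of-words stand-in for a real embedding model, the in-memory list stands in for the database, and `build_prompt` shows one possible way to instruct an LLM to stay within the retrieved context. It is not the project's actual implementation.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: word counts. A real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(question, chunks, k=2):
    """Return the k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:k]


def build_prompt(question, passages):
    """Hypothetical grounding prompt: the LLM is told to answer from context only."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below. "
        "If the context does not contain the answer, say you do not know.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical text chunks, as if extracted from an uploaded PDF.
chunks = [
    "The warranty period for the device is two years.",
    "Battery life is roughly ten hours under normal use.",
    "The device ships with a USB-C charging cable.",
]
question = "How long is the warranty period?"
top = retrieve(question, chunks, k=1)
prompt = build_prompt(question, top)
print(prompt)
```

The resulting prompt would be passed to whichever LLM the project selects. Telling the model to admit when the context lacks the answer is one common way to reduce hallucination, though it does not eliminate it.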

Research Challenges

We are in the research phase of this project and are currently focusing on the following challenges:

- Choosing an LLM for the text-generation component of the system.

- Choosing a model to use as an embedding model to vectorize the documents.

- Generalizing the retrieval system to handle different types of documents.

- Further mitigating hallucination in the LLM.

- Finding evaluation metrics to measure the performance of the retrieval system.

- Fine-tuning the LLM to generate human-like responses if performance is not satisfactory.

- Optimizing the inference time and memory usage of the retrieval system.

- Developing a user-friendly interface for the web application.
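For the evaluation-metric challenge listed above, two widely used retrieval metrics are recall@k and mean reciprocal rank (MRR). The sketch below shows how they could be computed. It assumes a labeled test set in which each question comes with the IDs of its relevant chunks; the chunk IDs and the sample data are hypothetical, and these metrics are candidates rather than the ones the project has settled on.

```python
def recall_at_k(retrieved, relevant, k):
    """Fraction of the relevant chunks that appear in the top-k retrieved chunks."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & set(relevant))
    return hits / len(relevant)


def reciprocal_rank(retrieved, relevant):
    """1 / rank of the first relevant chunk, or 0.0 if none was retrieved."""
    for rank, chunk_id in enumerate(retrieved, start=1):
        if chunk_id in relevant:
            return 1.0 / rank
    return 0.0


def mean_reciprocal_rank(runs):
    """Average reciprocal rank over (retrieved, relevant) pairs."""
    if not runs:
        return 0.0
    return sum(reciprocal_rank(ret, rel) for ret, rel in runs) / len(runs)


# Hypothetical results: ranked chunk IDs returned per question, plus ground truth.
runs = [
    (["c3", "c1", "c7"], {"c1"}),        # first relevant chunk at rank 2
    (["c2", "c5", "c9"], {"c2", "c9"}),  # first relevant chunk at rank 1
]

mrr = mean_reciprocal_rank(runs)
recall_q2 = recall_at_k(runs[1][0], runs[1][1], k=2)
print(f"MRR={mrr:.2f}, recall@2 for question 2={recall_q2:.2f}")
```

Recall@k measures how much of the relevant material reaches the LLM at all, while MRR rewards ranking a relevant chunk near the top. Both evaluate only the retrieval step, so the quality of the generated answer would need a separate measure.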

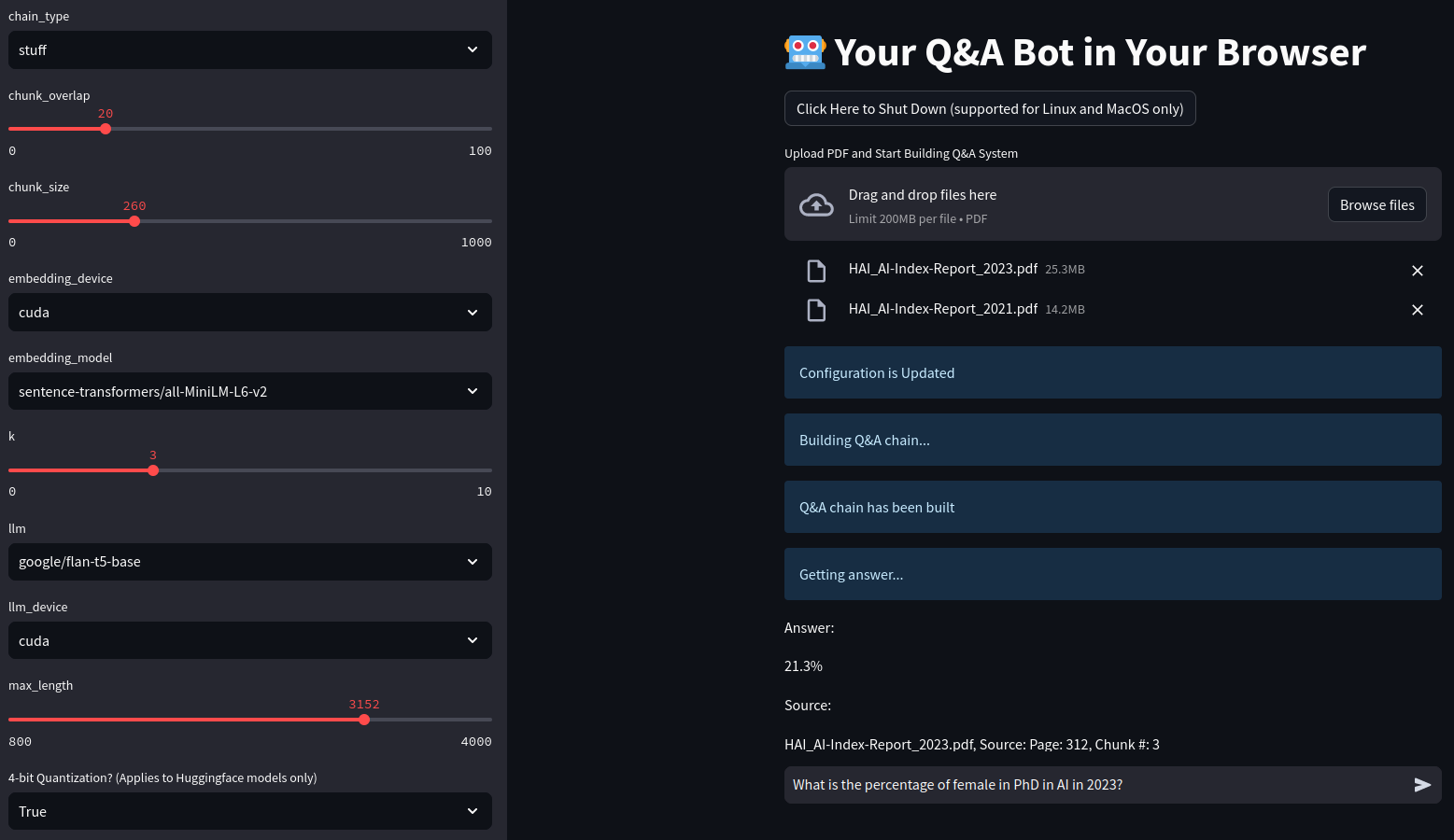

Web Application

We are currently developing a web application that allows users to upload PDF documents and ask questions about them. The application is at an early stage; we plan to reduce its inference time and memory usage and to improve the user interface.